Agents

Agent types

four basic kinds of agent programs will be discussed:

- simple reflex agents

- model-based reflex agents

- goal-based agents

- utility-based agents

All of these can be turned into learning agents.

Table-lookup agent (bad)

drawbacks:

- huge table → memory constraints

- takes a long time to build the table

- no autonomy

- no agent could ever learn all the right table entries from its experience
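To make the memory drawback concrete, here is a minimal sketch. It assumes the table is indexed by the full percept sequence seen so far, which is the usual textbook formulation. The names `table_size`, `TableDrivenAgent` and `TABLE`, and the two-square vacuum world, are illustrative assumptions and not part of these notes.

```python
def table_size(num_percepts, lifetime):
    """Entries needed for one row per possible percept sequence up to `lifetime` steps."""
    return sum(num_percepts ** t for t in range(1, lifetime + 1))


class TableDrivenAgent:
    """Looks up the action for the whole percept history (hypothetical sketch)."""

    def __init__(self, table):
        self.table = table
        self.percepts = []  # full percept history, grows forever

    def act(self, percept):
        self.percepts.append(percept)
        # Sequences missing from the table fall back to doing nothing.
        return self.table.get(tuple(self.percepts), "noop")


# Tiny hand-written table for an assumed two-square vacuum world.
TABLE = {
    (("A", "dirty"),): "suck",
    (("A", "clean"),): "right",
    (("A", "dirty"), ("A", "clean")): "right",
}

agent = TableDrivenAgent(TABLE)
print(agent.act(("A", "dirty")))
print(agent.act(("A", "clean")))
print(table_size(4, 10))  # 4 possible percepts, 10 time steps
```

Even with only 4 possible percepts the number of rows grows exponentially with the agent's lifetime, which is why the table cannot be stored or filled in for realistic problems.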

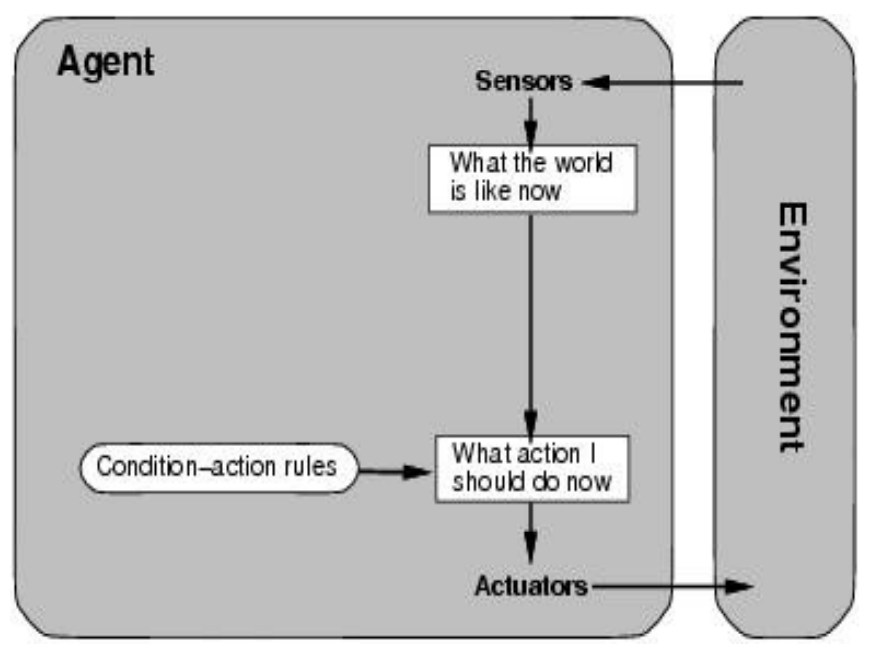

Simple reflex agent

Simple reflex agent: rectangles indicate the current internal state of the agent’s decision process, and ovals represent the background information used in the process.

- select action based on the current percept

- large reduction in possible percept/action situations

- implemented through condition-action rules

- e.g. if dirty then suck (vacuum)
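The condition-action idea above can be sketched in Python. This is only an illustration for an assumed two-square vacuum world (squares "A" and "B"); the rule list, `interpret_input` and the action names are hypothetical.

```python
# Condition-action rules, checked in order: (condition on state, action).
RULES = [
    (lambda s: s["status"] == "dirty", "suck"),
    (lambda s: s["location"] == "A", "right"),
    (lambda s: s["location"] == "B", "left"),
]


def interpret_input(percept):
    """Turn the raw percept into a state description."""
    location, status = percept
    return {"location": location, "status": status}


def simple_reflex_agent(percept):
    """Pick the action of the first rule that matches the current percept."""
    state = interpret_input(percept)
    for condition, action in RULES:
        if condition(state):
            return action
    return "noop"  # no rule matched


print(simple_reflex_agent(("A", "dirty")))
print(simple_reflex_agent(("A", "clean")))
```

Note that the agent has no memory: the same percept always produces the same action, whatever happened before.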

Pseudocode:

function SIMPLE-REFLEX-AGENT(percept) returns an action

static: rules, a set of condition-action rules

state <- INTERPRET-INPUT(percept)

rule <- RULE-MATCH(state, rule)

action <- RULE-ACTION[rule]

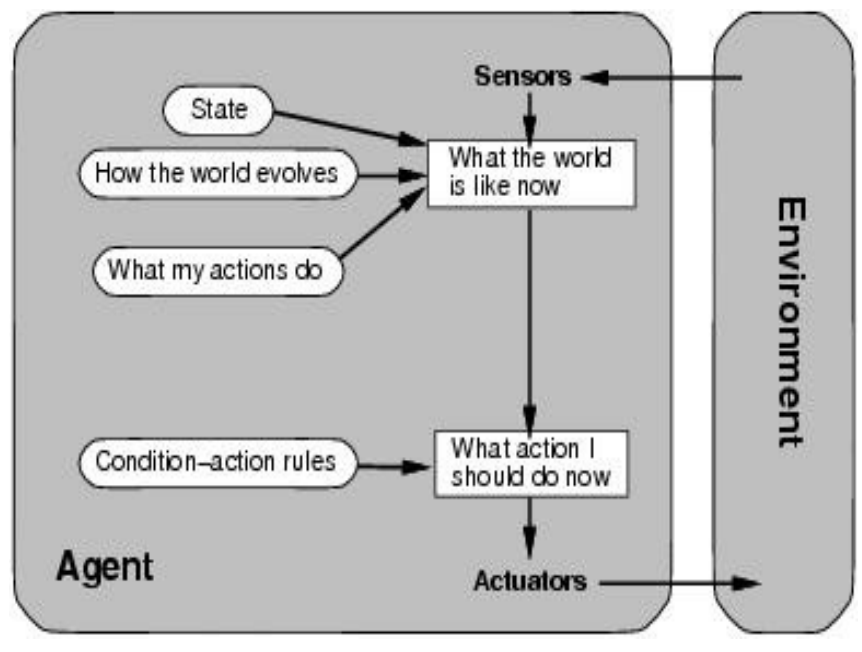

return actionModel-based reflex agent

- used to tackle partially observable environments

- maintain internal state that depends on the percept history

- e.g. agent keeps track of the part of the world it can’t see now (has history)

- keep track of how the world changes and how the agent's actions affect it → create a model of the world
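A minimal sketch of the internal-state idea, again for an assumed two-square vacuum world. The class name and the very simple "model" (the last known status of each square, plus the assumption that sucking cleans the current square) are illustrative only.

```python
class ModelBasedVacuumAgent:
    """Remembers the status of squares it cannot currently see (hypothetical sketch)."""

    def __init__(self):
        # Internal state: last known status per square, None means unknown.
        self.model = {"A": None, "B": None}

    def act(self, percept):
        location, status = percept
        self.model[location] = status  # update the state from the percept
        if status == "dirty":
            # Model of the agent's own action: sucking cleans this square.
            self.model[location] = "clean"
            return "suck"
        other = "B" if location == "A" else "A"
        if self.model[other] == "clean":
            return "noop"  # everything is known to be clean
        return "right" if location == "A" else "left"


agent = ModelBasedVacuumAgent()
for percept in [("A", "dirty"), ("A", "clean"), ("B", "clean")]:
    print(percept, "->", agent.act(percept))
```

Unlike a simple reflex agent, this one can stop moving once its model says both squares are clean, even though it only ever perceives one square at a time.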

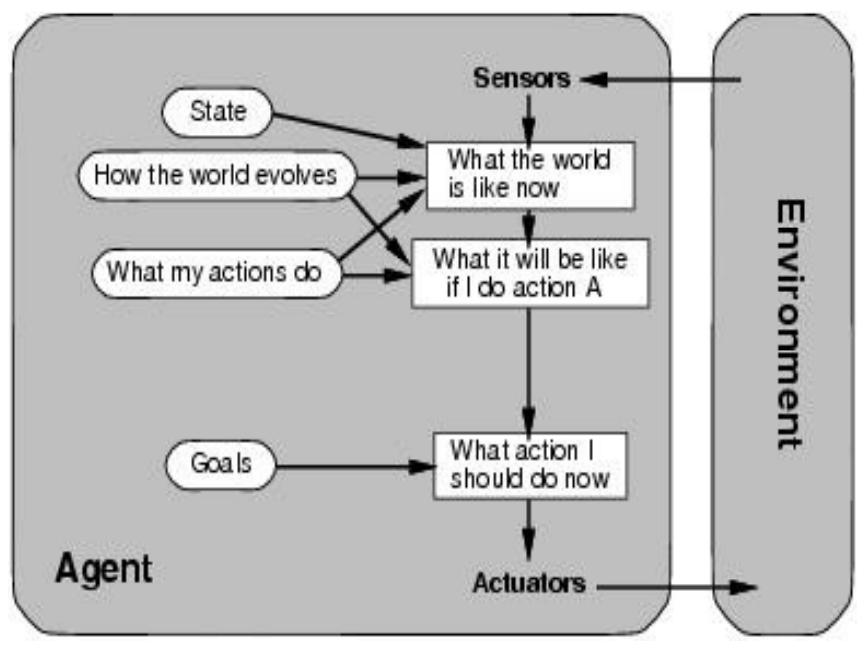

Goal-based agent

A model-based, goal-based agent. It keeps track of the world state as well as a set of goals it is trying to achieve, and chooses an action that will (eventually) lead to the achievement of its goals

- the agent needs a goal to know which situations are desirable

- things become difficult when long sequences of actions are required to reach the goal

- typically investigated/used in search and planning research

- major difference: the future is taken into account

- is more flexible since knowledge is represented explicitly and can be manipulated
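"Taking the future into account" can be sketched as follows: the agent uses a model to predict what each action sequence would lead to and picks one that ends in the goal. The number-line world, `predict` and `plan` are assumptions made for this example, and brute-force enumeration stands in for a proper search or planning algorithm.

```python
from itertools import product


def predict(state, action):
    """Hypothetical world model: the agent moves one step on a number line."""
    return state + {"left": -1, "right": 1}[action]


def plan(state, goal, actions=("left", "right"), max_depth=5):
    """Return the shortest action sequence whose predicted outcome is the goal."""
    for depth in range(max_depth + 1):
        for sequence in product(actions, repeat=depth):
            predicted = state
            for action in sequence:
                predicted = predict(predicted, action)
            if predicted == goal:
                return list(sequence)
    return None  # no plan found within max_depth steps


print(plan(0, 2))
print(plan(0, 10))
```

Because the goal is an explicit argument, the same agent can be pointed at a different goal without rewriting any rules. The number of sequences to try grows exponentially with the plan length, which is the difficulty with long action sequences mentioned above.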

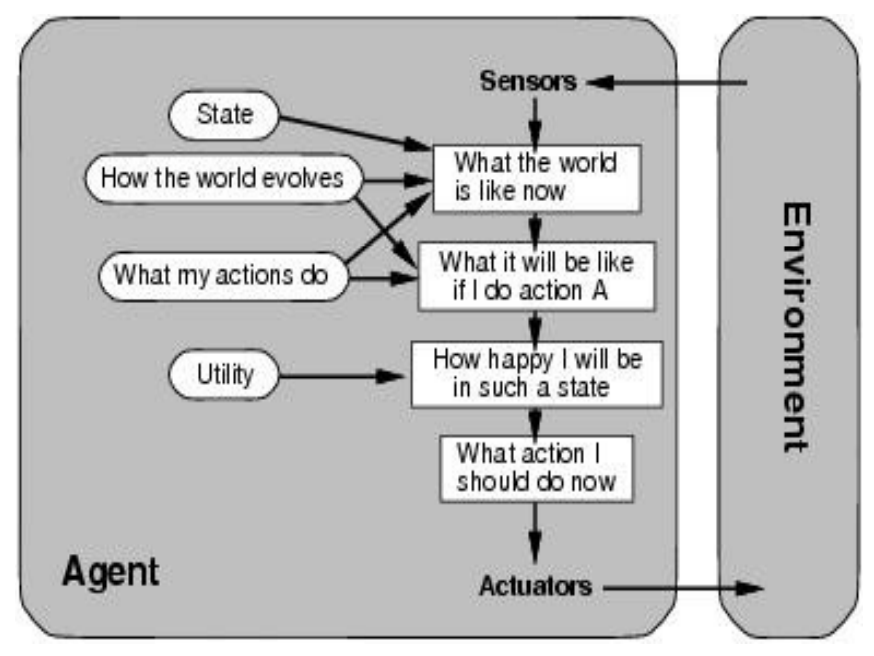

Utility-based agent

A model-based, utility-based agent. It uses a model of the world, along with a utility function that measures its preferences among states of the world.

- certain goals can be reached in different ways

- some are better; have a higher utility

- utility function maps a (sequence of) state(s) onto a real number

- improves on goals:

- selecting between conflicting goals

- select appropriately between several goals based on likelihood of success
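One common way to weigh goals by likelihood of success is expected utility. The sketch below assumes that each option either succeeds with probability `p_success` and yields `utility`, or fails and yields 0. The option names and numbers are invented for illustration.

```python
def expected_utility(option):
    """Probability of success times utility of success (failure assumed worth 0)."""
    return option["p_success"] * option["utility"]


def choose(options):
    """Pick the option with the highest expected utility."""
    return max(options, key=expected_utility)


options = [
    {"name": "fast route", "p_success": 0.5, "utility": 10.0},
    {"name": "safe route", "p_success": 0.9, "utility": 7.0},
]

best = choose(options)
print(best["name"], expected_utility(best))
```

A purely goal-based agent would treat both routes as equally good because both can reach the goal. The utility function is what lets the agent prefer the less rewarding but more reliable option here.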

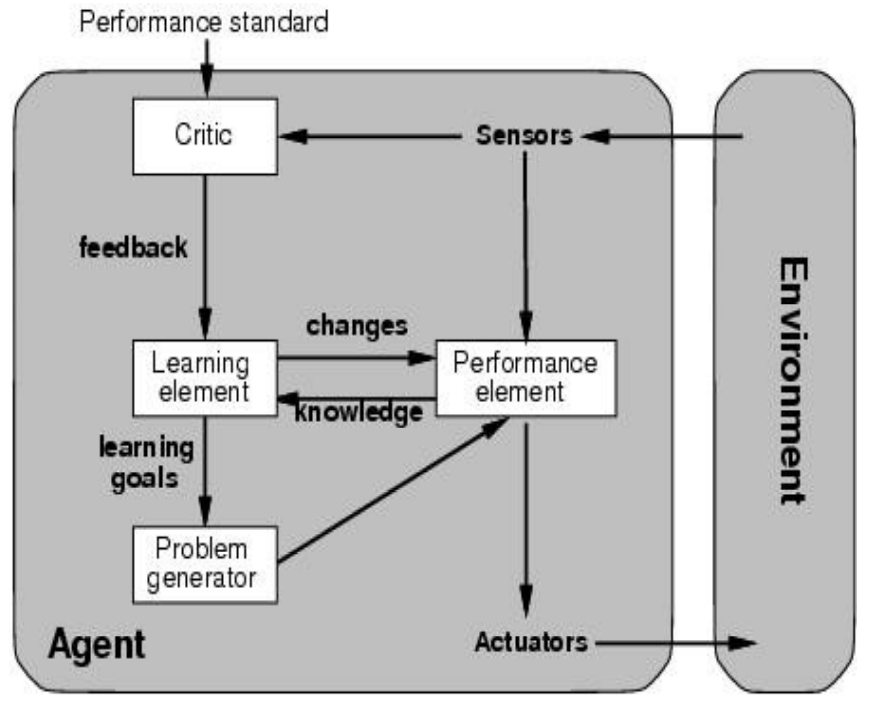

Learning agent

- all previous agent programs describe methods for selecting actions, but do not explain the origin of these programs

- learning mechanisms can be used to build these programs

- teach them instead of instructing them

- advantage is the robustness of the program toward initially unknown environments

- Learning element: introduces improvements to the performance element

- Critic: provides feedback on agent performance based on a fixed performance standard

- Performance element: selects actions based on percepts

- corresponds to the previous agent programs

- Problem generator: suggests actions that will lead to new and informative experiences

- exploration vs exploitation
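The four components can be sketched as a small class. This is an illustration only: the epsilon-greedy rule used to balance exploration and exploitation is a technique brought in for the example, and the class, the `critic` function and its reward values are hypothetical.

```python
import random


class LearningAgent:
    """Hypothetical sketch: learns the value of each action from critic feedback."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon  # probability of exploring
        self.rng = random.Random(seed)
        self.value = {a: 0.0 for a in self.actions}  # learned estimates
        self.count = {a: 0 for a in self.actions}

    def performance_element(self):
        """Exploit: select the action currently believed to be best."""
        return max(self.actions, key=lambda a: self.value[a])

    def problem_generator(self):
        """Explore: suggest an action that may give new information."""
        return self.rng.choice(self.actions)

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.problem_generator()
        return self.performance_element()

    def learning_element(self, action, feedback):
        """Improve the estimates used by the performance element (running mean)."""
        self.count[action] += 1
        self.value[action] += (feedback - self.value[action]) / self.count[action]


def critic(action):
    """Fixed performance standard, assumed for this example."""
    return {"left": 0.2, "right": 1.0}[action]


agent = LearningAgent(["left", "right"], epsilon=0.2)
for _ in range(100):
    action = agent.choose()
    agent.learning_element(action, critic(action))
print(agent.value)
```

With `epsilon=0` the agent never explores: it tries "left" first, receives some reward, and keeps choosing it even though "right" is better. That is the exploration vs exploitation trade-off in miniature.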

Supervised & unsupervised learning

Supervised

- “there is a teacher that says yes or no”

- training data comes with an expected outcome

Unsupervised

- is only given the training data → no expected outcome

- e.g. creating an agent that learns to classify stuff

- classifies in its own way
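The difference can be illustrated with two toy functions on one-dimensional data. Both are simple stand-ins chosen for this sketch (nearest neighbour for the supervised case, splitting at the largest gap for the unsupervised case), and the data is invented.

```python
def nearest_neighbour_label(training, x):
    """Supervised: training data is (value, expected label) pairs from a 'teacher'."""
    _, label = min(training, key=lambda pair: abs(pair[0] - x))
    return label


def split_at_largest_gap(values):
    """Unsupervised: no labels given, group the values at the widest gap."""
    ordered = sorted(values)
    gaps = [b - a for a, b in zip(ordered, ordered[1:])]
    cut = gaps.index(max(gaps)) + 1
    return ordered[:cut], ordered[cut:]


labelled = [(1.0, "small"), (1.2, "small"), (8.0, "large"), (9.0, "large")]
print(nearest_neighbour_label(labelled, 7.5))

unlabelled = [1.0, 9.0, 1.2, 8.0]
print(split_at_largest_gap(unlabelled))
```

The supervised function can only answer with labels it was given. The unsupervised one finds two groups "in its own way" but has no names for them.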

Solving problems by searching

essentially breadth-first search (BFS) and depth-first search (DFS)
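A minimal sketch of both searches on a small hand-made graph. The graph and node names are invented for illustration, and each function returns the path it finds from start to goal.

```python
from collections import deque


def bfs(graph, start, goal):
    """Breadth-first search: expand the shallowest path first (FIFO queue)."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbour in graph.get(node, []):
            if neighbour not in visited:
                visited.add(neighbour)
                frontier.append(path + [neighbour])
    return None


def dfs(graph, start, goal):
    """Depth-first search: expand the deepest path first (LIFO stack)."""
    frontier = [[start]]
    visited = set()
    while frontier:
        path = frontier.pop()
        node = path[-1]
        if node == goal:
            return path
        if node in visited:
            continue
        visited.add(node)
        # Reversed so that neighbours are explored in their listed order.
        for neighbour in reversed(graph.get(node, [])):
            if neighbour not in visited:
                frontier.append(path + [neighbour])
    return None


GRAPH = {"A": ["B", "C"], "B": ["D"], "C": ["G"], "D": ["G"], "G": []}

print(bfs(GRAPH, "A", "G"))  # ['A', 'C', 'G']
print(dfs(GRAPH, "A", "G"))  # ['A', 'B', 'D', 'G']
```

The only structural difference is the frontier: a queue gives BFS, which finds a path with the fewest steps, while a stack gives DFS, which follows one branch as deep as it can and may return a longer path.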