Performance

Why aren’t parallel applications scalable?

- sequential performance

- critical paths (dependencies between computations spread across processors)

- bottlenecks (one processor holds things up)

- algorithmic overhead (some things just take more effort to do in parallel)

- communication overhead (spending an increasing proportion of time on communication)

- load imbalance (makes all processors wait for the slowest one, dynamic behaviour)

- speculative loss (do A and B in parallel, but B is ultimately not needed → wasted work from speculative execution)

What is the maximum parallelism possible?

- depends on application, algorithm, program → data dependencies in execution

- MaxPar:

- analyzes the earliest possible “time” any data can be computed

- assumes a simple model for time it takes to execute instruction or go to memory

- result is the maximum parallelism available
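
The earliest-time idea can be sketched on a toy dependency graph. This is not the MaxPar tool itself, only a minimal illustration that assumes every operation costs one time unit and that the dependencies are known up front; the function name `max_parallelism_profile` and the example expression are hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+

def max_parallelism_profile(deps, cost=1):
    """deps maps each operation to the operations whose results it needs.

    Returns (finish time per operation, critical path length,
    average parallelism = total work / critical path length).
    """
    finish = {}
    # static_order() yields every operation after all of its dependencies.
    for op in TopologicalSorter(deps).static_order():
        # An operation can start as soon as its last input is available.
        start = max((finish[d] for d in deps.get(op, ())), default=0)
        finish[op] = start + cost
    total_work = cost * len(finish)
    critical_path = max(finish.values())
    return finish, critical_path, total_work / critical_path

# Toy expression: (a + b) * (c + d) + e
deps = {
    "a+b": [],
    "c+d": [],
    "mul": ["a+b", "c+d"],
    "add_e": ["mul"],
}
finish, span, avg_parallelism = max_parallelism_profile(deps)
print(finish, span, avg_parallelism)
```

Here the two additions can run at the same time, but the multiplication and the final addition sit on the critical path, so the average parallelism stays low no matter how many processors are available.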

Embarrassingly parallel computations

- no or very little communication between processes

- each process can do its tasks without any interaction with other processes

Calculating $\pi$ with Monte Carlo

- place a circle of radius 1 cm inside a square box with a side of 2 cm

- the ratio of the circle area to the square area is $\pi / 4$

- randomly choose a number of points in the square → for each point $(x, y)$, determine if $(x, y)$ is inside the circle

- the ratio of points in the circle to points in the square will give an approximation of $\pi / 4$
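
A minimal sketch of this estimate, assuming the square is $[-1, 1] \times [-1, 1]$ and the circle is the unit circle centred at the origin. The function names and the split into independently seeded tasks are illustrative assumptions; the tasks run one after another here, but each could go to its own process because they share nothing until the final sum.

```python
import math
import random

def count_inside(num_points, seed):
    """Count random points of the square [-1, 1] x [-1, 1] that land in the unit circle."""
    rng = random.Random(seed)  # separate generator per task, so tasks are independent
    inside = 0
    for _ in range(num_points):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        if x * x + y * y <= 1.0:
            inside += 1
    return inside

def estimate_pi(points_per_task, num_tasks):
    # No communication is needed until the per-task counts are added up.
    counts = [count_inside(points_per_task, seed) for seed in range(num_tasks)]
    total_points = points_per_task * num_tasks
    # points in circle / points in square approximates pi / 4
    return 4.0 * sum(counts) / total_points

approx = estimate_pi(points_per_task=50_000, num_tasks=4)
print(f"pi is approximately {approx:.4f} (error {abs(approx - math.pi):.4f})")
```

The only interaction between tasks is the final sum of counts, which is why this computation counts as embarrassingly parallel.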

Analytical / theoretical techniques

- involves simple algebraic formulas and ratios

- typical variables: data size ($n$), number of processors ($p$), machine constants

- to model performance of individual operations, components, algorithms in terms of the above

- be careful to characterize variations across processors

- model them with max operators

- constants are important in practice

- use asymptotic analysis carefully
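
As a small illustration of modelling variation across processors with a max operator, the sketch below predicts the parallel time as the compute time of the slowest processor plus a fixed message cost. The cost model, the function name, and the machine constants (1 µs per item, 50 µs per message) are assumptions made for this example, not measured values.

```python
def parallel_time_us(items_per_proc, t_op_us, t_msg_us):
    """Predicted time in microseconds: everyone waits for the slowest processor,
    then pays one message cost."""
    return max(n_i * t_op_us for n_i in items_per_proc) + t_msg_us

T_OP_US = 1    # assumed cost of processing one item
T_MSG_US = 50  # assumed cost of one message

balanced = [250, 250, 250, 250]  # 1000 items split evenly over 4 processors
skewed = [400, 200, 200, 200]    # same 1000 items, uneven split

t_balanced = parallel_time_us(balanced, T_OP_US, T_MSG_US)
t_skewed = parallel_time_us(skewed, T_OP_US, T_MSG_US)
print(t_balanced, t_skewed)
```

Both splits have the same average load, so a model built on averages would predict the same time for both; the max operator is what exposes the cost of the imbalance. The constant message cost also shows why constants matter: for small inputs it dominates the prediction.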

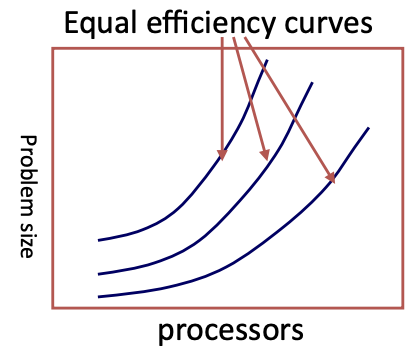

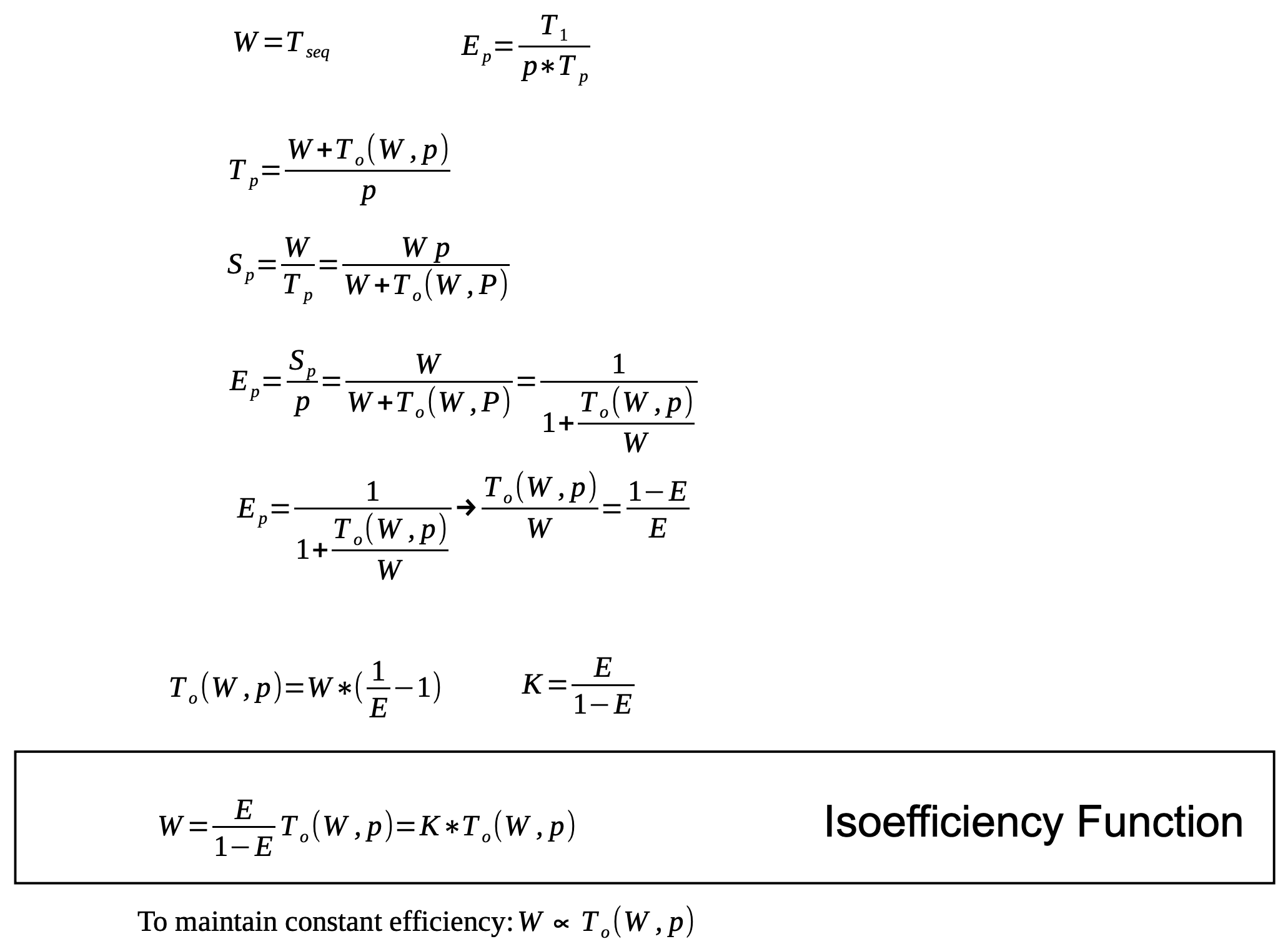

Isoefficiency

- goal is to quantify scalability

- how much increase in problem size is needed to retain the same efficiency on a larger machine?

- efficiency: $E = \frac{T_1}{p\,T_p}$ (serial time $T_1$, parallel time $T_p$ on $p$ processors)

- isoefficiency:

- equation for equal-efficiency curves

- if no solution → problem is not scalable in the sense defined by isoefficiency

- how the problem size ($W$) must increase with the number of processors ($p$) to keep the efficiency ($E$) constant

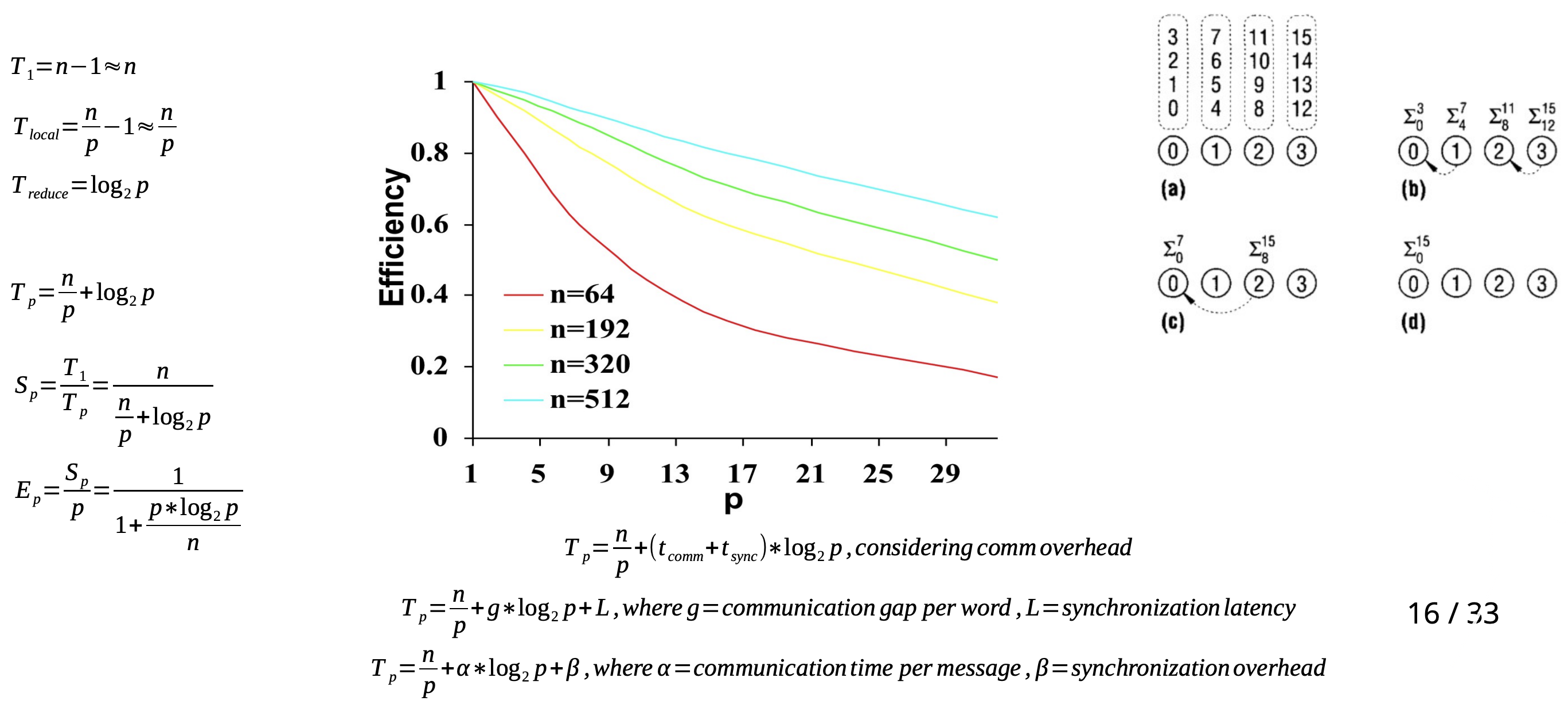

Scalability of adding numbers

- scalability of a parallel system is a measure of its capacity to increase speedup with more processors

- adding $n$ numbers ($n - 1$ sums) on $p$ processors with strip partition

Problem size and overhead

- informally, problem size is expressed as a parameter of the input size

- a consistent definition of the size of the problem is the total number of basic operations ($W$) → also refer to problem size as work ($W = T_1$)

- overhead of a parallel system is defined as the part of the cost ($p\,T_p$) not in the best serial algorithm

- denoted by $T_o$, it is a function of $W$ and $p$

- $T_o(W, p) = p\,T_p - W$ ($p\,T_p$ includes overhead)

Isoefficiency function

- with fixed efficiency $E$, $W$ as a function of $p$: $W = K\,T_o(W, p)$ with $K = \frac{E}{1 - E}$
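
The relation between work and overhead at fixed efficiency follows in a few steps. In this sketch $W$ is the work (serial time), $T_p$ the parallel time on $p$ processors, $T_o(W, p) = p\,T_p - W$ the overhead, and $K$ a shorthand constant introduced here for convenience.

```latex
\begin{align*}
E &= \frac{W}{p\,T_p} = \frac{W}{W + T_o(W, p)} = \frac{1}{1 + T_o(W, p)/W} \\
\frac{T_o(W, p)}{W} &= \frac{1 - E}{E} \\
W &= \frac{E}{1 - E}\, T_o(W, p) = K\, T_o(W, p), \qquad K = \frac{E}{1 - E}
\end{align*}
```

Solving the last line for $W$ in terms of $p$ gives the isoefficiency function; when no such solution exists, the efficiency cannot be held constant by growing the problem.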

Isoefficiency function of adding numbers

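- overhead function: $T_o(W, p) = \Theta(p \log p)$

- isoefficiency function: $W = \Theta(p \log p)$

- if $p$ doubles, $W$ needs to also be doubled to roughly maintain the same efficiency (more precisely, $W$ grows by a factor of $2\,(1 + 1/\log_2 p)$, which approaches 2 for large $p$)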

- isoefficiency functions can be more difficult to express for more complex algorithms
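
A numerical check for the adding example, under an assumed textbook-style cost model: each addition and each communication step takes one time unit, so $T_p = n/p + 2 \log_2 p$ and the overhead is $2 p \log_2 p$. The constant 2 and the function names are assumptions of this sketch; only the $p \log p$ growth matters for the conclusion.

```python
import math

def overhead(p):
    """Assumed overhead T_o = p*T_p - n = 2 p log2(p) for adding n numbers."""
    return 2 * p * math.log2(p)

def efficiency(n, p):
    """E = W / (W + T_o) with work W = n."""
    return n / (n + overhead(p))

def isoefficient_size(p, target_e):
    """Problem size that keeps the efficiency at target_e: W = K * T_o."""
    k = target_e / (1 - target_e)
    return k * overhead(p)

for p in (4, 8, 16, 32):
    n = isoefficient_size(p, 0.8)
    print(f"p = {p:2d}  n = {n:6.0f}  E = {efficiency(n, p):.2f}")

# Keeping n fixed while adding processors lets the efficiency drop.
print(f"n = 64, p = 16: E = {efficiency(64, 16):.2f}")
```

With a target efficiency of 0.8 the required sizes come out as 64, 192, 512 for $p$ = 4, 8, 16 under this model, so the problem has to grow somewhat faster than the processor count.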

More complex isoefficiency functions

- a typical overhead function $T_o$ can have several distinct terms of different orders of magnitude with respect to both $W$ and $p$

- we can balance $W$ against each term of $T_o$ and compute the respective isoefficiency functions for individual terms

- keep only the term that requires the highest growth rate with respect to $p$
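
A worked example of the term-by-term balancing, using a hypothetical overhead function with two terms (chosen for illustration, not taken from a specific algorithm in these notes). $K = E/(1 - E)$ is the constant fixed by the target efficiency.

```latex
\begin{align*}
T_o(W, p) &= p^{3/2} + p^{3/4}\, W^{3/4} \\[4pt]
\text{first term:}\quad W &= K\, p^{3/2}
  &&\Rightarrow\; W = \Theta\!\left(p^{3/2}\right) \\
\text{second term:}\quad W &= K\, p^{3/4}\, W^{3/4}
  \;\Rightarrow\; W^{1/4} = K\, p^{3/4}
  &&\Rightarrow\; W = K^4\, p^3 = \Theta\!\left(p^3\right) \\[4pt]
\text{overall:}\quad W &= \Theta\!\left(p^3\right)
\end{align*}
```

The second term demands the faster growth, so it alone determines the isoefficiency function: if $W$ grows like $p^3$, the first term is automatically covered as well.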

→ my notes end at slide 20

